How to Build Machine Learning Models for Data Science: A Step-by-Step Guide


4 Jun 2025, 3:21 pm

Machine learning (ML) is central to data-driven decision making and lets businesses solve real-world problems such as fraud detection, customer behavior prediction and process optimization. ML models for data science help uncover hidden patterns that lead to smarter insights.

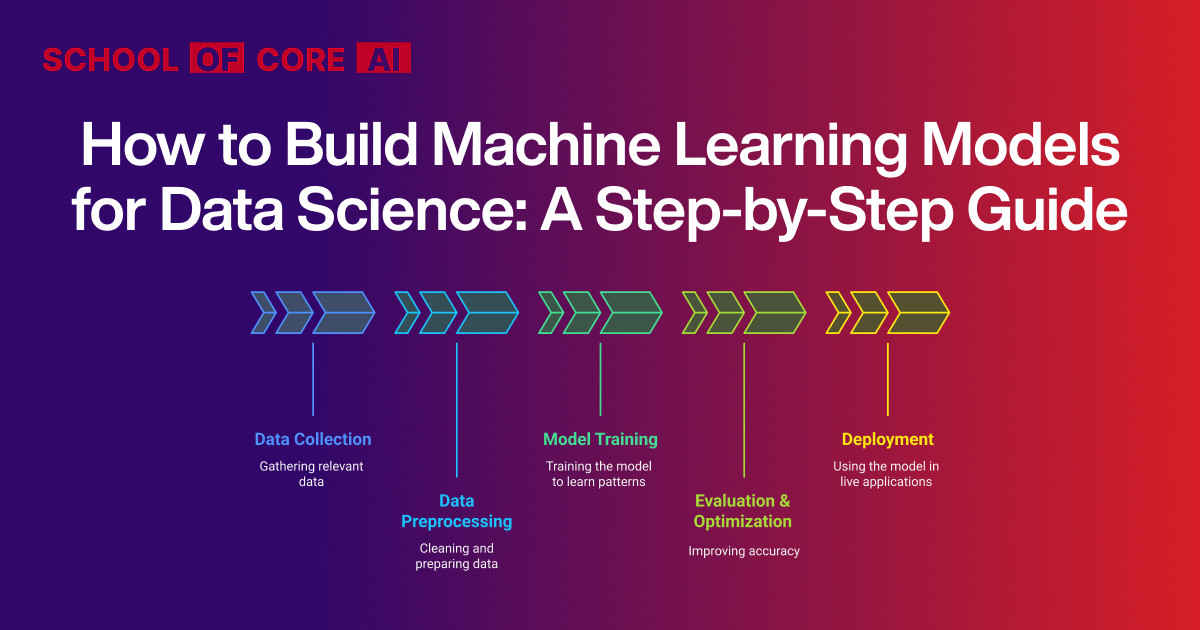

Effective models are built through several key steps:

- Data Collection - Gathering relevant data.

- Data Preprocessing - Cleaning and preparing data.

- Model Training - Teaching the model to recognize patterns.

- Evaluation & Optimization - Measuring and improving accuracy.

- Deployment - Using the model in live applications.

This guide walks through these steps using the example of training a regression model. It also explains how AI workflows simplify training and deploying models efficiently. The aim is to help both novices and experts follow a systematic approach to ML models for data science.

What Are ML Models for Data Science?

Machine learning models for data science are algorithms that detect patterns in data and make decisions or predictions without being explicitly programmed for each case. Data science uses these models to automate processes, streamline procedures and glean insights from massive datasets.

There are three main types of ML models for data science:

- Supervised Learning: Learns from labeled data in order to make predictions. Classic examples include sales forecasting with regression and spam email detection with classification.

- Unsupervised Learning: Identifies patterns in unlabeled data, which can be used for grouping or anomaly detection, as in customer segmentation.

- Reinforcement Learning: The model learns by trial and error to maximize a reward. It is most common in robotics and gaming AI applications.

Real-world applications of ML models in data science include fraud detection in banking, predictive maintenance in manufacturing and personalized recommendations in e-commerce. These models help businesses make smarter, data-driven decisions efficiently.

Step 1: Data Collection and Preparation

1.1 Gathering the Right Data

The success of ML models in data science depends heavily on the quality and relevance of the data used to train them. Poor or biased data sources lead to inaccurate predictions and results that cannot be trusted.

Common data sources include:

- Public Datasets: Kaggle, the UCI Machine Learning Repository and Google Dataset Search offer free datasets for ML projects.

- APIs: Services such as Twitter and OpenWeather provide real-time data, and financial market data providers supply live market feeds.

- Company Databases: Internal databases, CRM systems and IoT sensors supply company-specific data.

Quality data keeps AI workflows running well and helps models generalize to new, unseen data. Proper data collection is the foundation of accurate and effective machine learning models for data science.

1.2 Data Cleaning and Preprocessing

Once data has been collected, cleaning and preparing it is fundamental to successful ML model development in data science. Poor data quality can lead to biased predictions and inefficient AI workflows.

Key aspects of data preprocessing include:

- Handling missing values: Fill gaps with the mean, the median or interpolation so that incomplete records do not have to be discarded.

- Removing outliers and duplicates: Identify and remove extreme values and duplicate records for better modeling.

- Feature selection and engineering: Select the features relevant to the problem and derive new ones from existing features to improve predictive power.

- Scaling and normalization: Rescale numerical features to comparable ranges so that no single feature dominates model training, which often improves performance.

Proper data preprocessing streamlines the AI workflow and makes reliable predictions from ML models for data science possible.
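The preprocessing steps above can be sketched in plain Python. This is a minimal illustration, not a production pipeline: the toy records, the column names and the helper functions `drop_duplicates`, `impute_mean` and `min_max_scale` are all hypothetical and were chosen only to make each step visible.

```python
from statistics import mean

# Hypothetical toy records; None marks a missing value.
rows = [
    {"age": 25, "income": 40000},
    {"age": None, "income": 52000},
    {"age": 35, "income": 61000},
    {"age": 35, "income": 61000},  # exact duplicate of the previous row
    {"age": 45, "income": 83000},
]

def drop_duplicates(records):
    """Keep the first occurrence of each record, preserving order."""
    seen, unique = set(), []
    for record in records:
        key = tuple(sorted(record.items()))
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique

def impute_mean(records, column):
    """Replace missing values in one column with the mean of the observed values."""
    observed = [r[column] for r in records if r[column] is not None]
    fill = mean(observed)
    return [{**r, column: fill if r[column] is None else r[column]} for r in records]

def min_max_scale(records, column):
    """Rescale one column linearly to the range [0, 1]."""
    values = [r[column] for r in records]
    low, high = min(values), max(values)
    span = (high - low) or 1  # avoid dividing by zero for a constant column
    return [{**r, column: (r[column] - low) / span} for r in records]

clean = drop_duplicates(rows)
clean = impute_mean(clean, "age")
for column in ("age", "income"):
    clean = min_max_scale(clean, column)

for record in clean:
    print(record)
```

In a real project, the fill value and the scaling range would normally be computed on the training data only and then reused on the test data, so that no information from the test set leaks into training.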

Step 2: Choosing the Right ML Model

Choosing the right model is essential to building successful ML models in data science. The choice depends on the nature of the data and the problem to be solved. The major types of ML models compare as follows:

- Regression Models: Predict continuous values, as in sales forecasting.

- Classification Models: Assign data to discrete labels, as in spam detection and fraud detection.

- Clustering Models: Discover latent groupings in unlabeled data, such as customer segments found with k-means.

- Deep Learning Models: Handle complex patterns in large datasets and are used for image recognition and natural language processing.

The chosen model must also fit other critical factors: dataset size, feature complexity and prediction goals. A well-matched model supports efficient AI workflows and model training that yields accurate results.

Step 3: Model Training and Evaluation

3.1 Splitting Data into Training and Test Sets

The dataset needs to be split into separate training and test sets to check that ML models for data science perform well on new data. Evaluating on held-out data tests the model's ability to generalize and helps detect overfitting.

- Train-Test Split: A common approach is the 80/20 split, where 80% of the data is used for model training and 20% is reserved for testing.

- Cross-validation: Instead of relying on a single split, cross-validation techniques such as k-fold validation divide the data into multiple subsets and train and test the model several times, which makes the performance estimate more reliable.

With these techniques, businesses can build robust ML models that perform well in real-world applications, supporting efficient AI workflows and accurate predictions.
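Both splitting strategies can be sketched without any libraries. The functions below are hand-rolled for illustration and their names are hypothetical; scikit-learn ships equivalents (`train_test_split` and `KFold` in `sklearn.model_selection`) that would normally be used instead.

```python
import random

def simple_train_test_split(items, test_fraction=0.2, seed=0):
    """Shuffle a copy of the data and hold out `test_fraction` of it for testing."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_test = int(round(len(shuffled) * test_fraction))
    cut = len(shuffled) - n_test
    return shuffled[:cut], shuffled[cut:]

def k_fold_indices(n_samples, k=5):
    """Yield (train_indices, test_indices) pairs; each sample is tested exactly once."""
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, test_idx
        start += size

data = list(range(100))

# 80/20 split: 80 training items, 20 test items.
train, test = simple_train_test_split(data)
print(len(train), len(test))

# 5-fold cross-validation: five different train/test partitions of the same data.
folds = list(k_fold_indices(len(data), k=5))
for train_idx, test_idx in folds:
    print(len(train_idx), len(test_idx))
```

With k-fold validation, the model would be trained and scored once per fold and the scores averaged, which is what makes the estimate less dependent on one lucky or unlucky split.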

3.2 Training ML Models for Data Science

In data science, training an ML model means feeding it data so that it learns patterns and can predict outputs. Libraries such as Scikit-Learn or TensorFlow let you code this process in a few lines. Training a regression model with Scikit-Learn is a typical example.
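A minimal sketch of training a regression model with Scikit-Learn might look like the following. The dataset is synthetic and is an assumption made purely for illustration (a noisy line, y = 3x + 2); the calls used (`train_test_split`, `LinearRegression`, `mean_squared_error`) are standard Scikit-Learn functions, and NumPy is assumed to be installed alongside it.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split

# Synthetic data (an assumption for illustration): y = 3x + 2 plus Gaussian noise.
rng = np.random.default_rng(42)
X = rng.uniform(0, 10, size=(200, 1))
y = 3.0 * X[:, 0] + 2.0 + rng.normal(0, 1.0, size=200)

# Hold out 20% of the rows for testing.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)

# Fit an ordinary least-squares linear regression on the training rows only.
model = LinearRegression()
model.fit(X_train, y_train)

# Score the model on rows it has never seen.
y_pred = model.predict(X_test)
rmse = float(np.sqrt(mean_squared_error(y_test, y_pred)))

print(f"slope={model.coef_[0]:.2f} intercept={model.intercept_:.2f} rmse={rmse:.2f}")
```

The learned slope and intercept should land close to the values used to generate the data, and the test RMSE should be close to the noise level, which is a quick sanity check that training worked.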

Step 4: Model Evaluation and Optimization

4.1 Measuring Model Performance

After training a model, its performance must be evaluated to confirm that it makes reliable predictions. The choice of evaluation metric depends on the type of model, such as classification or regression.

- Accuracy, Precision and Recall: The core metrics for classification models. Accuracy measures overall correctness, precision penalizes false positives and recall penalizes false negatives.

- RMSE (Root Mean Squared Error): A popular metric for regression models. It is the square root of the MSE, so it expresses the typical size of the prediction error in the same units as the target.

- MSE (Mean Squared Error): The average of the squared differences between predicted and actual values; lower values indicate better performance.
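The metrics above are simple enough to compute by hand, which can help build intuition before reaching for a library. The sketch below uses small made-up label and prediction lists; the function names are hypothetical.

```python
import math

def mse(actual, predicted):
    """Mean of the squared differences between actual and predicted values."""
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)

def rmse(actual, predicted):
    """Square root of the MSE, in the same units as the target."""
    return math.sqrt(mse(actual, predicted))

def classification_metrics(actual, predicted, positive=1):
    """Return (accuracy, precision, recall) for a binary classification task."""
    pairs = list(zip(actual, predicted))
    tp = sum(1 for a, p in pairs if a == positive and p == positive)
    fp = sum(1 for a, p in pairs if a != positive and p == positive)
    fn = sum(1 for a, p in pairs if a == positive and p != positive)
    accuracy = sum(1 for a, p in pairs if a == p) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return accuracy, precision, recall

# Regression example: errors are 1, 0, -1 and -2.
y_true = [3.0, 5.0, 7.0, 9.0]
y_pred = [2.0, 5.0, 8.0, 11.0]
print(mse(y_true, y_pred), rmse(y_true, y_pred))

# Classification example: 3 true positives, 1 false positive, 1 false negative.
labels = [1, 0, 1, 1, 0, 0, 1, 0]
guesses = [1, 0, 0, 1, 0, 1, 1, 0]
print(classification_metrics(labels, guesses))
```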

4.2 Optimizing Model Performance

Model optimization is a crucial step in making sure that the ML models used for data science provide the best possible predictions. It includes hyperparameter tuning, automating processes and improving the features used in training.

- Hyperparameter Tuning: Hyperparameters govern the training process, and tuning them can greatly improve a model's accuracy. Techniques such as Grid Search and Random Search find good hyperparameters by testing various combinations.

- Grid Search: Evaluates every combination in the specified hyperparameter grid.

- Random Search: Samples random combinations from the parameter space and evaluates only those; because it tries a fixed number of combinations, it is often much faster than grid search.

- AI Workflows for Hyperparameter Selection: AI workflows automate hyperparameter tuning, saving time and resources because good configurations are found automatically.

- Feature Importance and Engineering: Feature importance scores identify the features that contribute most to the model's performance and so guide feature selection and engineering. By focusing on the most important features or creating new, more relevant ones, the accuracy of the model can be improved.

Optimizing ML models for data science with these techniques prepares your models for deployment and helps them deliver precise, reliable results in real-world applications.
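The difference between grid search and random search can be sketched as follows. Everything here is an illustrative assumption: `validation_error` is a hypothetical stand-in for "train a model with these hyperparameters and measure its validation error", and the grid values are made up. In Scikit-Learn, `GridSearchCV` and `RandomizedSearchCV` implement the same ideas with cross-validation built in.

```python
import itertools
import random

def validation_error(learning_rate, depth):
    """Hypothetical stand-in for training a model and returning its validation error."""
    return (learning_rate - 0.1) ** 2 + 0.01 * (depth - 4) ** 2

grid = {
    "learning_rate": [0.01, 0.05, 0.1, 0.5],
    "depth": [2, 4, 6, 8],
}

def grid_search(grid):
    """Evaluate every combination in the grid and return the best one."""
    names = list(grid)
    candidates = [
        dict(zip(names, combo)) for combo in itertools.product(*grid.values())
    ]
    best = min(candidates, key=lambda params: validation_error(**params))
    return best, len(candidates)

def random_search(grid, n_iter, seed=0):
    """Evaluate only `n_iter` randomly sampled combinations and return the best one."""
    rng = random.Random(seed)
    candidates = [
        {name: rng.choice(values) for name, values in grid.items()}
        for _ in range(n_iter)
    ]
    best = min(candidates, key=lambda params: validation_error(**params))
    return best, len(candidates)

best_grid, n_grid = grid_search(grid)
best_random, n_random = random_search(grid, n_iter=6)

print("grid search:  ", best_grid, "after", n_grid, "evaluations")
print("random search:", best_random, "after", n_random, "evaluations")
```

Grid search is guaranteed to find the best point in the grid but pays for every combination, while random search trades that guarantee for a fixed, smaller budget.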

Step 5: Deploying Your ML Model

5.1 Converting ML Models into APIs

After you train an ML model for data science, you need to deploy it so that it can be used in real-time applications. An efficient way to do this is to expose the model as an API that serves predictions on demand.

- Exporting Trained Models: First, save your trained model with a serialization tool such as Pickle or ONNX so that it can be loaded by a real-time application.

- Pickle: A Python standard-library module that serializes objects, including machine learning models, into files that can be reloaded and used later.

- ONNX (Open Neural Network Exchange): A format that lets you export models from various ML frameworks (like PyTorch, TensorFlow) and deploy them across different platforms.

- Building an API with Flask or FastAPI: With an exported model, you can use a web framework such as Flask or FastAPI to implement a simple RESTful API for serving predictions. The API receives input data, feeds it through your trained model and returns the predicted output.
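The export-then-serve flow can be sketched with the standard library alone. This is a simplified illustration under stated assumptions: the "model" is a hypothetical dictionary of learned parameters standing in for a fitted estimator, and `handle_predict` is a hypothetical function showing the logic that a Flask or FastAPI route would wrap, not an actual web server.

```python
import json
import pickle

# Hypothetical stand-in for a trained regression model: its learned parameters.
trained_params = {"slope": 3.0, "intercept": 2.0}

# Export: serialize the model to bytes (these bytes would normally go to a file).
model_bytes = pickle.dumps(trained_params)

# Load: the serving process restores the model once at startup.
loaded_params = pickle.loads(model_bytes)

def predict(params, values):
    """Apply the linear model to a list of input values."""
    return [params["slope"] * v + params["intercept"] for v in values]

def handle_predict(request_body: str) -> str:
    """Parse a JSON request, run the model and return a JSON response."""
    payload = json.loads(request_body)
    predictions = predict(loaded_params, payload["inputs"])
    return json.dumps({"predictions": predictions})

response = handle_predict('{"inputs": [1.0, 2.0]}')
print(response)
```

Pickle files should only be loaded from trusted sources, because unpickling can execute arbitrary code. A fitted Scikit-Learn estimator can be pickled the same way, provided the loading environment has compatible library versions installed.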

5.2 Using AI Workflows for Model Deployment

Efficient deployment of ML models in data science relies on cloud platforms and automation. AI workflows provide scalability, reliability and continuous improvement by streamlining the deployment process, automating model updates and integrating well with applications.

Cloud Deployment Platforms:

- AWS SageMaker: Provides scalable training, deployment and monitoring of ML models.

- Google AI Platform: Provides hosting for cloud-based models and prediction services.

- Azure Machine Learning: Provides an end-to-end solution for deploying and managing models.

Automating Updates and Retraining:

AI workflows can periodically monitor incoming data, retrain models on it and deploy the updated versions automatically. This prevents model performance from drifting over time.

With cloud services and AI workflows, businesses can deploy, scale and maintain ML models for data science without manual intervention, making them available for real-world applications.
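One simple way such a workflow might decide when to retrain is to compare recent prediction errors against the error measured at deployment time. The sketch below is an assumption made for illustration, not a description of any particular platform: the function `should_retrain`, the 25% tolerance and the error values are all hypothetical.

```python
import math

def rmse(errors):
    """Root mean squared error of a list of prediction errors."""
    return math.sqrt(sum(e * e for e in errors) / len(errors))

def should_retrain(baseline_rmse, recent_errors, tolerance=0.25):
    """Flag retraining when recent RMSE exceeds the baseline by more than `tolerance`."""
    return rmse(recent_errors) > baseline_rmse * (1 + tolerance)

baseline = 1.0  # RMSE measured on the test set when the model was deployed

stable_errors = [0.9, -1.1, 1.0, -0.8]   # recent errors similar to the baseline
drifted_errors = [2.1, -1.9, 2.4, -2.2]  # recent errors roughly twice as large

print(should_retrain(baseline, stable_errors))
print(should_retrain(baseline, drifted_errors))
```

A scheduled job could run a check like this on each new batch of labeled data and trigger the training pipeline only when the check fires.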

Common Challenges in Building ML Models for Data Science

1. Overfitting & Underfitting

Overfitting and underfitting are among the major issues in ML models for data science. An overfit model fits noise rather than the underlying patterns, while an underfit model is too simple to capture those patterns. The goal is to avoid both.

How to Avoid Overfitting:

- Regularization (L1/L2): Penalizes large weights so that the model cannot give too much weight to specific features.

- Dropout Layers: Used in neural networks. During training, dropout randomly turns off neurons to improve generalization.

- Cross-validation: Applied during training. It splits the dataset into multiple folds to evaluate model performance on unseen data.
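To make the effect of L2 regularization concrete, the sketch below fits a single-weight linear model with and without a penalty. It is a deliberately tiny example with made-up data: for a model y ≈ w·x with no intercept, minimizing the squared error plus `l2_penalty * w**2` has the closed-form solution used in `fit_slope` (a hypothetical helper name).

```python
def fit_slope(xs, ys, l2_penalty=0.0):
    """Least-squares slope through the origin with an L2 penalty on the weight.

    Minimizes sum((y - w * x) ** 2) + l2_penalty * w ** 2, whose solution is
    w = sum(x * y) / (sum(x * x) + l2_penalty).
    """
    numerator = sum(x * y for x, y in zip(xs, ys))
    denominator = sum(x * x for x in xs) + l2_penalty
    return numerator / denominator

# Made-up data that roughly follows y = 2x.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.1, 3.9, 6.2, 7.8]

weights = {penalty: fit_slope(xs, ys, penalty) for penalty in (0.0, 10.0, 100.0)}
for penalty, weight in weights.items():
    print(f"l2_penalty={penalty:>5}: weight={weight:.3f}")
```

As the penalty grows, the learned weight shrinks toward zero. A moderate penalty limits how strongly the model can react to any one feature, while an excessive penalty pushes the model toward underfitting.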

2. Data Bias and Ethics

Biased datasets can lead to biased predictions, especially in sensitive tasks such as hiring, lending and healthcare.

How to Overcome Data Bias:

- Data Diversity and Representativeness: The training data must represent all groups fairly.

- Bias Detection Metrics: Use fairness metrics and fairness-aware algorithms to identify bias in the model's predictions.

- Ethics for AI: Follow responsible AI practices to avoid discrimination.
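As one example of a bias detection metric, the sketch below computes the demographic parity difference: the gap between the rates at which different groups receive a positive prediction. The article does not prescribe a specific metric, so this choice is an assumption, as are the group labels and predictions, which are made up.

```python
def selection_rate(predictions):
    """Fraction of cases that received a positive (1) prediction."""
    return sum(predictions) / len(predictions)

def demographic_parity_difference(predictions, groups):
    """Return the largest gap in selection rate between groups, plus the per-group rates."""
    by_group = {}
    for prediction, group in zip(predictions, groups):
        by_group.setdefault(group, []).append(prediction)
    rates = {group: selection_rate(preds) for group, preds in by_group.items()}
    return max(rates.values()) - min(rates.values()), rates

# Made-up model outputs (1 = approved) for applicants from two groups.
preds = [1, 1, 0, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

gap, rates = demographic_parity_difference(preds, groups)
print(rates, gap)
```

A gap near zero means the groups are selected at similar rates. A large gap is a signal to investigate rather than proof of unfairness, and other fairness metrics may be more appropriate depending on the task.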

3. Scaling ML Models for Real-World Applications

Efficiency and flexibility are necessary for deploying ML models at scale. Cloud-based AI workflows provide the infrastructure needed for seamless deployment.

Best Practices for Scaling Models:

- Use Cloud Services: Platforms such as AWS SageMaker, Google AI Platform and Azure ML handle large-scale deployments.

- Batch Processing and Streaming: Process data efficiently using batch jobs or real-time streaming solutions.

- Model Optimization: Techniques such as quantization and model compression reduce computational costs.

Addressing these challenges enables businesses to develop accurate, fair and scalable ML models for practical use in the real world.

Conclusion

- Building ML models for data science follows structured steps: collecting and preprocessing data, choosing the appropriate model, then training, evaluation and deployment.

- High-quality data is essential for accurate results, and the choice between regression, classification, clustering or deep learning depends on the problem at hand.

- Optimization through hyperparameter tuning and feature engineering improves performance, while deployment on cloud platforms with AI workflows lets models scale appropriately.

- Continuously experimenting with different models and automated AI workflows is the most reliable way to improve predictions and efficiency. To get started, try building and training a regression model on a simple dataset today.